Navigating the AI Highway: Choosing the Right Protocols for Real-time, Low-Latency Applications 🚀

Good afternoon, everyone! If you’re joining us after lunch, you know this slot can be tough, but we’re here to keep things exciting and insightful. Today, we’re diving deep into a fascinating challenge in the world of AI: how do we ensure our intelligent applications run with the lightning-fast speed and rock-solid reliability they demand?

Our expert panel, featuring Paris from Google Cloud, Indu from Wells Fargo, and Anal Krishnan Sva from Google, took us on a journey through the intricate world of communication protocols, revealing how they power everything from self-driving cars to secure financial transactions.

The Need for Speed: Why Real-time Matters in AI ⏱️

Imagine a self-driving car navigating a bustling city street. Every millisecond counts. The car’s perception system constantly processes data from various sensors – Lidar, cameras, range detection, object detection. If there’s even a slight delay in analyzing this real-time data, the consequences could be catastrophic. An emergency brake might not engage in time, leading to an accident. This isn’t just a hypothetical; it’s the core challenge AI applications face: they need to crunch vast amounts of data and act upon it without any perceptible latency.

Similarly, consider a fraudulent credit card transaction. If the system doesn’t detect and block a suspicious purchase within a narrow window, a few seconds and certainly under a minute, the money is lost. Multiple backend systems must communicate in perfect tandem, at high throughput and ultra-low latency, to analyze the transaction, assess risk, run model inferences, and return a score, all before the transaction is finalized.

This isn’t just about raw processing power; it’s fundamentally about how these systems talk to each other.

Decoding the Protocol Puzzle: gRPC, WebSockets, SSE & Beyond 💡

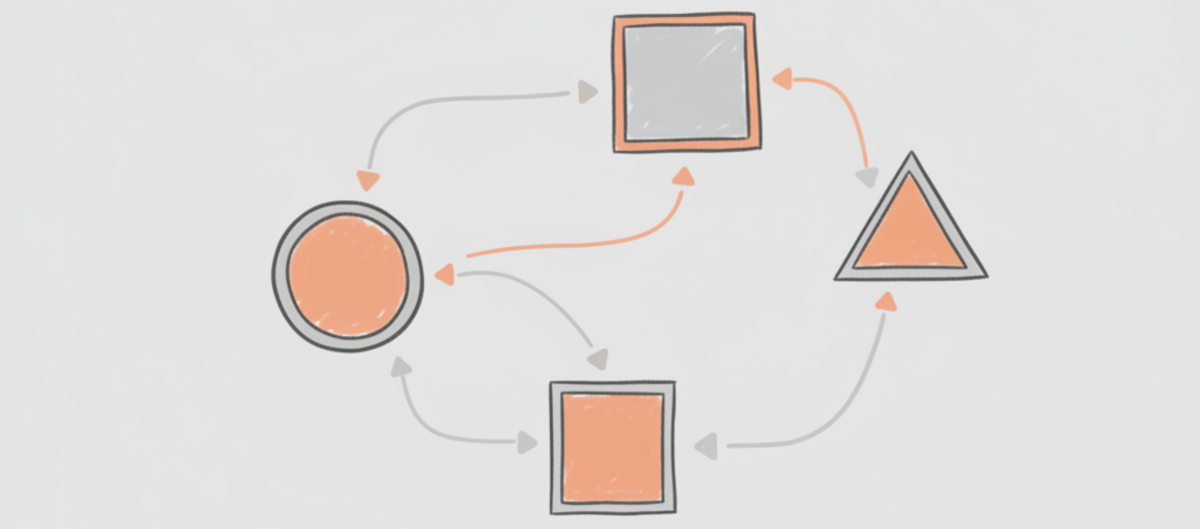

When building AI applications, developers face a critical choice: which communication protocol best suits their needs? The answer, as our speakers emphasized, is almost always a hybrid model. There isn’t one magic bullet; different use cases demand different approaches.

The audience shared insights into building AI applications, often focusing on inference platforms that serve various functions, from HTTP to gRPC to low-level WebSockets for streaming. This perfectly set the stage for understanding that different users have diverse requirements, leading to varied implementations.

Real-World AI in Action: Driving Cars & Fighting Fraud 🚗💳

Let’s zoom back into those critical examples:

- Self-Driving Cars: These vehicles rely on constant data streams from sensors. Imagine if HTTP/2’s Head-of-Line blocking prevented an emergency brake from applying! This scenario demands protocols like MQTT for IoT sensors or Server-Sent Events (SSE) for specific feeds like traffic light indications, allowing the car to plan its actions accordingly.

- Fraud Detection: For backend services like payment gateways, risk management systems, and location detection, gRPC shines. Its high throughput and low latency are ideal for these deterministic systems that require multiple services to talk in tandem at incredible speeds.

The HTTP/2 Head-of-Line Hurdle & HTTP/3’s Quick Fix 🛠️

A significant challenge with gRPC, which currently relies on HTTP/2, is the Head-of-Line (HoL) blocking issue. HTTP/2 multiplexes all of its streams over a single TCP connection, and TCP delivers bytes strictly in order. If a single TCP packet is dropped, every stream on that connection stalls until the packet is retransmitted, even streams whose own data arrived intact. This is a critical impediment for real-time applications like fraud detection or autonomous vehicles.

The solution? Enter HTTP/3, powered by a newer transport protocol called QUIC. QUIC runs over UDP but provides its own TCP-like reliability and congestion control, with TLS 1.3 built in, and crucially removes transport-level HoL blocking. It does so by treating each stream on a connection independently: if one stream loses a packet, only that stream waits for the retransmission while the others keep flowing. This is a massive leap forward for latency-sensitive AI.
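To make the difference concrete, here is a minimal toy model of the two delivery rules. It is not a real network stack: the `Packet` type, the stream names, and the arrival ticks are all made up for illustration. It only shows when the application is allowed to read each packet under connection-wide ordering versus per-stream ordering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int      # position in the connection-wide send order
    stream: str   # logical stream the packet belongs to
    arrival: int  # tick at which the packet reaches the receiver


def delivery_times(packets, per_stream_ordering):
    """Return {seq: tick at which the application can read the packet}.

    per_stream_ordering=False models one ordered byte stream (HTTP/2 over
    TCP): a packet is readable only once every earlier packet on the
    connection has arrived.
    per_stream_ordering=True models QUIC-style streams: a packet only waits
    for earlier packets of its own stream.
    """
    delivered = {}
    for p in packets:
        blockers = [
            q for q in packets
            if q.seq <= p.seq
            and (not per_stream_ordering or q.stream == p.stream)
        ]
        delivered[p.seq] = max(q.arrival for q in blockers)
    return delivered


# Hypothetical trace: packet 1 (stream "lidar") is lost and retransmitted,
# so it arrives at tick 50 instead of tick 1.
trace = [
    Packet(seq=0, stream="lidar", arrival=0),
    Packet(seq=1, stream="lidar", arrival=50),
    Packet(seq=2, stream="camera", arrival=2),
    Packet(seq=3, stream="brake", arrival=3),
]

tcp_like = delivery_times(trace, per_stream_ordering=False)
quic_like = delivery_times(trace, per_stream_ordering=True)

print("single ordered stream:", tcp_like)
print("independent streams:  ", quic_like)
```

In this toy trace, the "brake" packet is held until tick 50 under connection-wide ordering but is readable at tick 3 when streams are independent. Only the "lidar" stream pays for its own loss.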

Furthermore, WebTransport emerges as a successor to WebSockets, leveraging HTTP/3 and QUIC to offer the best of both gRPC and WebSockets.

AI Assistants: A Hybrid Protocol Harmony 🤖🌐

Consider an AI assistant or chatbot. When an end-user sends a textual prompt from a client or browser to a gateway, it might use SSE or WebSockets. But once the request hits the internal architecture, where the agent orchestrator communicates with a vector database or a model agent to stream tokens, gRPC bidirectional streaming becomes critical. It saves compute and time, and it offers greater tolerance for failures.

This illustrates the hybrid architecture: browser-facing interactions might use one set of protocols, while internal, high-performance, server-to-server communications use another.
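For the browser-facing leg, here is a rough sketch of how streamed model tokens could be framed as Server-Sent Events. The wire format (`event:`, `id:`, and `data:` lines, ended by a blank line) follows the SSE text format. The event names `token` and `done`, the JSON payload shape, and the helper names are assumptions made for illustration, not anything the speakers prescribed.

```python
import json


def sse_event(data, event=None, event_id=None):
    """Format one Server-Sent Events message as text.

    Each field is a "name: value" line, and a blank line ends the event.
    Multi-line data is split into one "data:" line per line of payload.
    """
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    if event_id is not None:
        lines.append(f"id: {event_id}")
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    return "\n".join(lines) + "\n\n"


def stream_tokens(tokens):
    """Yield one SSE frame per model token, then a final 'done' event."""
    for i, token in enumerate(tokens):
        yield sse_event(json.dumps({"token": token}), event="token", event_id=i)
    yield sse_event("{}", event="done")


# Hypothetical token stream, as a gateway might relay it to a browser.
frames = list(stream_tokens(["Hello", ",", " world"]))
print("".join(frames), end="")
```

A gateway would write these frames to an HTTP response with the `text/event-stream` content type. The internal hop that produces the tokens could still be a gRPC stream, which is the hybrid split described above.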

Data Formats: Protocol Buffers vs. JSON – A Tale of Two Twins 📊💬

The choice of data format profoundly impacts performance:

- Protocol Buffers (Protobuf): These are ideal for backend services where contracts are well-defined and deterministic. Protobuf messages use a compact binary encoding, which reduces payload size, memory footprint, and serialization latency compared with text formats. They suit vector databases, which deal primarily with numerical data, because numbers serialize and deserialize far more efficiently in binary than as text.

- JSON: Although heavier and slower to parse because it is text-based, JSON remains important for probabilistic systems like Large Language Models (LLMs). LLMs consume and produce text, so human-readable strings are essential, especially in feedback loops used for training and comprehension.

For scenarios where you need the benefits of both, a JSON-to-gRPC transcoding gateway can bridge the gap, translating JSON requests into Protobuf for gRPC servers.
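To get a feel for the size gap on numerical data, the sketch below encodes the same embedding vector as JSON text and as raw little-endian float32 values. It uses Python's `struct` module as a stand-in, not the Protobuf library. Protobuf stores packed `float` fields as 4-byte little-endian values, so the payload size is comparable, though a real message adds a small tag and length prefix. The vector length of 768 and the random values are arbitrary assumptions.

```python
import json
import random
import struct

random.seed(0)
# Hypothetical embedding vector, as a vector database might store or return.
embedding = [random.uniform(-1.0, 1.0) for _ in range(768)]

# Text encoding: every float becomes a decimal string plus separators.
as_json = json.dumps(embedding).encode("utf-8")

# Binary encoding: 4 bytes per value as little-endian float32.
# (Stand-in for a packed Protobuf float field; precision drops to float32.)
as_binary = struct.pack(f"<{len(embedding)}f", *embedding)

# Round-trip the binary form to confirm the values survive.
decoded = struct.unpack(f"<{len(embedding)}f", as_binary)
max_error = max(abs(a - b) for a, b in zip(embedding, decoded))

print("JSON bytes:  ", len(as_json))
print("binary bytes:", len(as_binary))
print("max round-trip error:", max_error)
```

The binary form is always exactly 4 bytes per value, while the JSON form spends a variable number of characters on each float. The trade-off is readability and float32 precision, which is why the text format still earns its place where humans or LLMs need to read the payload.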

Navigating the Trade-offs: Firewalls, Proxies & Load Balancing 🛡️⚖️

Choosing a protocol isn’t just about speed; it’s about the entire ecosystem:

- Browser Limitations: gRPC isn’t natively designed for browsers. Solutions like gRPC-Web exist, using a client-side library and an Envoy proxy to translate between HTTP/1.1 and HTTP/2. However, according to the speakers, this adds a 30-40% overhead on the payload and spikes CPU cycles due to encoding/decoding. For browser-initiated actions, WebSockets are often a better fit due to native support and full-duplex connectivity, requiring no translation.

- Firewall Challenges: While HTTP/3 and QUIC offer significant performance gains, their UDP-based nature means legacy or enterprise firewalls might drop packets by default, making them impractical without proper network configuration.

- Security Concerns: Web Application Firewalls (WAFs) are crucial for security. However, they struggle to inspect binary gRPC streams or WebSocket traffic, creating a “blind tunnel” that could allow attacks like SQL injection to pass through undetected. This demands alternative security measures at different layers.

- Load Balancing for AI: Traditional round-robin load balancing can distribute work unevenly, overwhelming some servers while others sit underutilized. For AI, a KV metric-based load balancing technique at the gateway layer spreads requests more evenly, reducing queuing and improving the “time to first token” (TTFT) for AI responses.

- Resilience: gRPC inherits HTTP/2 flow control, which provides backpressure: a receiver that is overwhelmed can slow the sender down. HTTP/3 and WebTransport add connection migration: QUIC identifies a connection by a connection ID rather than by IP address and port, so a stream can survive a client switching networks (e.g., Wi-Fi to mobile data). In contrast, a WebSocket is bound to its underlying TCP connection, so it breaks when the client’s network changes and must be re-established.
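The load-balancing point in the list above can be illustrated with a small simulation. This is a simplified sketch, not the gateway technique the speakers described: the single `load` number stands in for whatever metric the model servers report, the request costs are invented, and load never drains because requests never complete.

```python
from itertools import cycle


def round_robin(costs, n_backends):
    """Assign requests to backends in fixed rotation, ignoring load."""
    load = [0] * n_backends
    for backend, cost in zip(cycle(range(n_backends)), costs):
        load[backend] += cost
    return load


def least_loaded(costs, n_backends):
    """Assign each request to the backend currently reporting the lowest load."""
    load = [0] * n_backends
    for cost in costs:
        backend = min(range(n_backends), key=load.__getitem__)
        load[backend] += cost
    return load


# Hypothetical request costs (e.g. tokens to generate); every third is heavy.
costs = [900, 50, 50] * 4

rr = round_robin(costs, n_backends=3)
ll = least_loaded(costs, n_backends=3)
print("round robin :", rr, "spread:", max(rr) - min(rr))
print("least loaded:", ll, "spread:", max(ll) - min(ll))
```

With this workload, round robin sends every heavy request to the same backend, while the metric-aware policy keeps the backends much closer together. Inference requests vary widely in cost, which is why request counts alone are a poor balancing signal.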

Quick Q&A Snippets 💬

- gRPC Port Number: gRPC isn’t restricted to a specific port; it uses whatever port the application binds the server to and the client dials (the speakers mentioned 40000 and 8081 as examples).

- Choosing for Agentic AI: For vector databases (numbers, huge data), Protobuf is ideal thanks to its compact encoding and efficient serialization. For LLM feedback loops (strings, understandability), JSON is preferred. A transcoding gateway can enable a win-win by converting between formats.

The Journey Ahead for AI Protocols ✨

From the days of Apache Tomcat servers and manually configuring XML files to now, where we meticulously choose between sophisticated protocols for AI, it’s clear we’re on an incredible learning journey. The world of AI is complex, demanding not just powerful models but also intelligent plumbing to connect them efficiently.

The key takeaway is clear: there’s no single “best” protocol. The optimal solution is almost always a hybrid architecture that strategically combines gRPC, WebSockets, SSE, MQTT, and the emerging HTTP/3 and WebTransport, considering every factor from data format and latency requirements to network infrastructure and security. By understanding these nuances, we empower our AI applications to perform at their peak, driving innovation and shaping the future.

Let’s keep talking, keep sharing, and keep learning together!