🚀 Scaling AI Agents: How the MCP Marketplace and gRPC Unlock Organizational Intelligence

In the rapidly evolving world of artificial intelligence, an agent is only as good as the information it can access. Imagine a brilliant engineer with no access to the company’s codebase, internal APIs, or documentation: they would be fundamentally limited. Organizations face exactly this challenge today when scaling AI agents.

At a recent tech deep-dive, Sumangal and Vashali from Intuit’s platform team presented their solution: the MCP Marketplace. Built on the Model Context Protocol (MCP) and gRPC, it turns a decentralized, complex problem into a streamlined, enterprise-grade platform.

📉 The Context Gap: Why Scaling AI Agents is Hard

While building a single AI agent is relatively straightforward, scaling agents across an organization of thousands of developers exposes critical friction points. Without a unified strategy, organizations run into four major walls:

- Poor Discoverability: Without a central registry, teams often work in silos, and multiple developers end up building the same context-fetching logic independently.

- Inconsistency and Risk: Without shared standards there are no quality gates, so unverified or buggy services can feed incorrect context to LLMs and cause unpredictable behavior.

- Security and Auditing Gaps: Managing sensitive user credentials or Personal Access Tokens (PATs) becomes a nightmare. Without a central system, there is no systematic way to log who accessed what data.

- Operational Friction: Complex runtime configurations and manual setups create a steep learning curve, effectively killing developer adoption.

🏗️ Introducing the MCP Marketplace

To solve these challenges, the team built the MCP Marketplace. Think of it as the npm for AI capabilities. It serves as a central registry for discovering, installing, and managing context services.

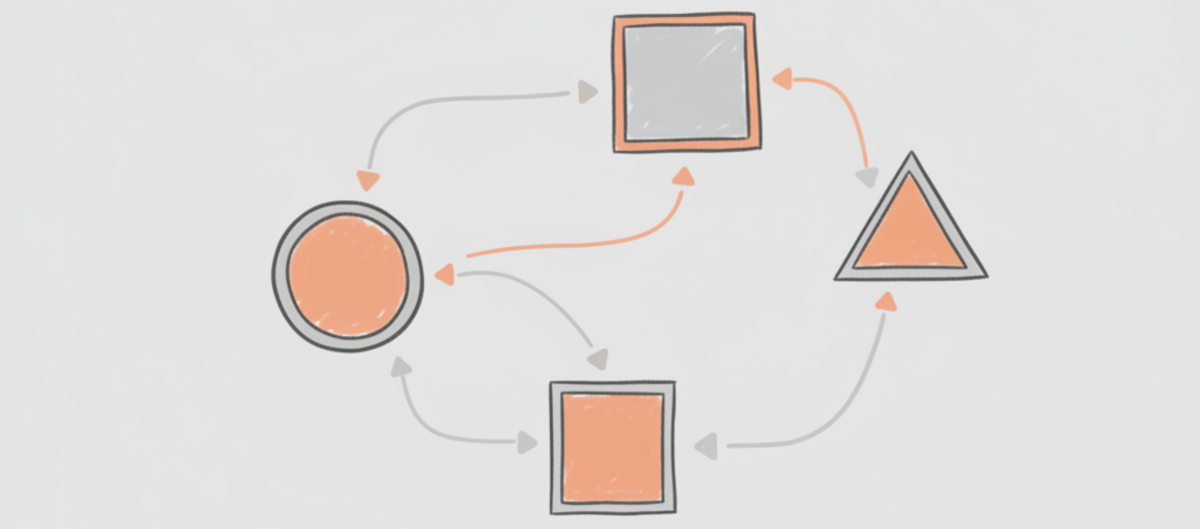

The platform caters to two distinct groups:

- Producers: Service teams who build and provide context.

- Consumers: Developers who use AI agents to accelerate their workflows.
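To make the two roles concrete, here is a minimal in-memory sketch of how a catalog might separate publishing (producers) from discovery (consumers). The `ServiceEntry` fields, the `Registry` class, the ownership check, and the substring search are all illustrative assumptions, not the team’s actual schema or API; a real marketplace would sit behind a service with persistent storage.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceEntry:
    """One catalog entry. All field names are hypothetical."""
    name: str
    description: str
    owner_team: str          # the producer team that publishes it
    version: str
    verified: bool = False   # flipped only after quality and security gates pass
    tags: set[str] = field(default_factory=set)


class Registry:
    """In-memory stand-in for a central marketplace registry."""

    def __init__(self) -> None:
        self._entries: dict[str, ServiceEntry] = {}

    def publish(self, entry: ServiceEntry) -> None:
        # Names are unique across the organization, so a second team cannot
        # quietly publish a duplicate service under someone else's name.
        existing = self._entries.get(entry.name)
        if existing is not None and existing.owner_team != entry.owner_team:
            raise ValueError(f"{entry.name!r} is owned by {existing.owner_team}")
        self._entries[entry.name] = entry

    def search(self, query: str) -> list[ServiceEntry]:
        # Consumers only see verified entries whose name, description, or
        # tags contain the query (case-insensitive substring match).
        q = query.lower()
        hits = [
            e for e in self._entries.values()
            if e.verified and (
                q in e.name.lower()
                or q in e.description.lower()
                or any(q in t.lower() for t in e.tags)
            )
        ]
        return sorted(hits, key=lambda e: e.name)


registry = Registry()
registry.publish(ServiceEntry("jira-context", "Fetch Jira tickets", "dev-tools",
                              "1.2.0", verified=True, tags={"tickets"}))
registry.publish(ServiceEntry("build-logs", "Read CI build logs", "ci-team", "0.1.0"))
print([e.name for e in registry.search("tickets")])  # ['jira-context']
print(registry.search("logs"))                       # [] (not yet verified)
```

Hiding unverified entries from search is one simple way to turn "curated catalog" into an enforced rule rather than a convention.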

🛠️ Streamlining the Producer Journey

For the teams building MCP services, the marketplace removes the infrastructure headache. The journey is powered by a robust CI/CD pipeline:

- Boilerplate Generation: Developers can automatically generate a dedicated repository pre-populated with boilerplate code.

- Automated Pipelines: Any code push triggers a pipeline that builds a Docker image.

- Security Scanning: The system performs comprehensive security scans to identify vulnerabilities before any code moves forward.

- Versioned Releases: Images are tagged and stored in the enterprise Artifactory (serving as the Docker registry), so updates go through a clean, versioned release process.

This setup allows developers to focus strictly on functionality rather than infrastructure configuration.
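The producer pipeline boils down to an ordered gate: build, scan, then tag and push only if the scan is clean. Below is a minimal sketch of that gate logic. The severity levels, the rule of blocking at HIGH or above, the `version-shortsha` tag scheme, and the `artifactory.example.com` host are assumptions for illustration; the talk does not specify Intuit’s scanner, thresholds, or tagging convention.

```python
from dataclasses import dataclass

# Assumed severity scale; real scanners each define their own.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


@dataclass(frozen=True)
class Finding:
    vuln_id: str
    severity: str  # one of SEVERITY_RANK's keys


def scan_passes(findings: list[Finding], block_at: str = "HIGH") -> bool:
    """The gate fails if any finding is at or above the blocking severity."""
    limit = SEVERITY_RANK[block_at]
    return all(SEVERITY_RANK[f.severity] < limit for f in findings)


def release(service: str, version: str, commit_sha: str,
            findings: list[Finding],
            registry: str = "artifactory.example.com") -> str:
    """Return the image reference to push, or raise if the scan gate fails.

    Assumes the build stage has already produced the image that was scanned.
    """
    if not scan_passes(findings):
        worst = max(findings, key=lambda f: SEVERITY_RANK[f.severity])
        raise RuntimeError(f"release blocked by {worst.vuln_id} ({worst.severity})")
    # Immutable, versioned tag: semantic version plus short commit SHA.
    return f"{registry}/mcp/{service}:{version}-{commit_sha[:7]}"


ref = release("jira-context", "1.2.0", "9f8e7d6c5b4a", [Finding("VULN-1", "LOW")])
print(ref)  # artifactory.example.com/mcp/jira-context:1.2.0-9f8e7d6
```

Tagging with both the version and the commit means each tag maps to exactly one build, which keeps rollbacks predictable.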

🖱️ The Consumer Experience: One-Click Context

On the consumer side, the goal is to move from discovery to installation in seconds.

- Search and Discover: Developers browse a curated catalog of verified MCP services.

- One-Click Installation: A single CLI command runs the installer module, which pulls the necessary artifacts or retrieves the service URLs.

- Secure Secret Management: The platform uses a native keychain/vault to store sensitive tokens. Secrets are never stored in local configuration files, preventing accidental exposure.

- Instant Configuration: The system automatically writes the necessary settings into the MCP config file, making the context immediately available to the AI agent.
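Here is a minimal sketch of the install step, assuming the agent reads a JSON config with an `mcpServers` map of `command`/`args` entries (a shape several MCP clients use; the talk does not say which format Intuit targets). It illustrates the key split: the token goes to a keychain, while the config file only records how to launch the service. `InMemoryKeychain` and the `mcp-cli run <name>` launcher are hypothetical stand-ins; a real tool could back the keychain with the OS store, for example via Python’s `keyring` package.

```python
import json
import tempfile
from pathlib import Path


class InMemoryKeychain:
    """Hypothetical stand-in for the OS keychain or a vault."""

    def __init__(self) -> None:
        self._items: dict[tuple[str, str], str] = {}

    def set(self, service: str, account: str, secret: str) -> None:
        self._items[(service, account)] = secret

    def get(self, service: str, account: str) -> str:
        return self._items[(service, account)]


def install(name: str, token: str, config_path: Path,
            keychain: InMemoryKeychain, launcher: str = "mcp-cli") -> dict:
    # 1. The secret goes to the keychain and is never written to the config.
    keychain.set("mcp-marketplace", name, token)
    # 2. Load (or create) the agent's MCP config and register an entry that
    #    launches the (hypothetical) marketplace CLI, which fetches the token at runtime.
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {"command": launcher, "args": ["run", name]}
    config_path.write_text(json.dumps(config, indent=2))
    return config


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "mcp.json"
    keychain = InMemoryKeychain()
    config = install("jira-context", "example-token", path, keychain)
    on_disk = path.read_text()

print("example-token" in on_disk)                       # False
print(keychain.get("mcp-marketplace", "jira-context"))  # example-token
```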

📡 gRPC: The Architectural Backbone

The real magic happens behind the scenes with gRPC. The team uses a gRPC middleware, essentially a powerful client-side interceptor, to orchestrate every session.

- Centralized Authentication: Before a request leaves the environment, the middleware intercepts the gRPC call and injects user authentication headers (via SSO or OAuth).

- Validation: On the server side, a gRPC interceptor validates the user’s identity. Only fully authorized requests are processed.

- Auditability: Because every request flows through the middleware, the system captures critical data points: tool usage frequency, service latency, and gRPC error codes.

- Secure Injection: The middleware retrieves tokens from the secure keychain and populates them into the execution environment of the target MCP service, so tokens stay off the local host machine.
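The interceptor flow can be modeled without any networking to show who does what. The sketch below wraps handlers the way gRPC interceptors do: the client-side middleware injects an `authorization` metadata header and records an audit event (tool, latency, status code), and the server-side interceptor rejects calls with an unknown token. This is a stdlib-only model, not the team’s code; the `Request`/`Response` types and the static token set (standing in for real SSO/OAuth validation) are assumptions. With real gRPC, the same hooks go through your language’s interceptor API, such as Python’s `grpc.UnaryUnaryClientInterceptor` and `grpc.ServerInterceptor`.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Request:
    tool: str
    payload: dict
    metadata: dict[str, str] = field(default_factory=dict)  # like gRPC metadata


@dataclass
class Response:
    code: str  # gRPC status name, e.g. "OK" or "UNAUTHENTICATED"
    body: dict = field(default_factory=dict)


Handler = Callable[[Request], Response]
AUDIT_LOG: list[dict] = []  # stand-in for the analytics pipeline


def server_auth_interceptor(valid_tokens: set[str], handler: Handler) -> Handler:
    """Server side: only calls carrying a recognized bearer token reach the handler."""
    def wrapped(req: Request) -> Response:
        auth = req.metadata.get("authorization", "")
        if not auth.startswith("Bearer ") or auth.removeprefix("Bearer ") not in valid_tokens:
            return Response("UNAUTHENTICATED")
        return handler(req)
    return wrapped


def client_middleware(get_token: Callable[[], str], next_call: Handler) -> Handler:
    """Client side: inject the auth header, time the call, record an audit event."""
    def wrapped(req: Request) -> Response:
        # get_token stands in for the keychain / SSO lookup.
        req.metadata["authorization"] = f"Bearer {get_token()}"
        start = time.perf_counter()
        resp = next_call(req)
        AUDIT_LOG.append({
            "tool": req.tool,
            "latency_ms": (time.perf_counter() - start) * 1000,
            "code": resp.code,
        })
        return resp
    return wrapped


def search_tickets(req: Request) -> Response:
    # A toy MCP tool behind the server interceptor.
    return Response("OK", {"results": [f"match for {req.payload['q']}"]})


server = server_auth_interceptor({"token-abc"}, search_tickets)
r_ok = client_middleware(lambda: "token-abc", server)(Request("search_tickets", {"q": "login bug"}))
r_bad = client_middleware(lambda: "expired", server)(Request("search_tickets", {"q": "login bug"}))
print(r_ok.code, r_bad.code)           # OK UNAUTHENTICATED
print([e["code"] for e in AUDIT_LOG])  # ['OK', 'UNAUTHENTICATED']
```

Because the audit record is written in the client middleware, rejected calls are logged too, which is what makes "who tried to access what" answerable later.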

📊 Measuring Success and Looking Ahead

You cannot improve what you do not measure. The marketplace uses an Analytics Collector to track:

- Adoption Trends: Which MCP services are gaining the most traction?

- Engagement: How often are specific tools being invoked by agents?

- Reliability: Real-time monitoring of latency and error rates to ensure a consistent developer experience.
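Each audit event can be rolled up into the three views listed above. This minimal sketch assumes events carry `tool`, `user`, `latency_ms`, and a gRPC status `code`; the event shape, the sample values, and the choice of a nearest-rank 95th percentile are illustrative, not details from the talk.

```python
import math
from collections import defaultdict


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile (values must be non-empty)."""
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


def summarize(events: list[dict]) -> dict[str, dict]:
    """Roll raw audit events up into per-tool adoption, engagement, reliability."""
    by_tool: dict[str, list[dict]] = defaultdict(list)
    for e in events:
        by_tool[e["tool"]].append(e)
    report = {}
    for tool, evs in by_tool.items():
        errors = sum(1 for e in evs if e["code"] != "OK")
        report[tool] = {
            "distinct_users": len({e["user"] for e in evs}),  # adoption
            "calls": len(evs),                                 # engagement
            "error_rate": errors / len(evs),                   # reliability
            "p95_latency_ms": p95([e["latency_ms"] for e in evs]),
        }
    return report


# Made-up sample events for illustration only.
events = [
    {"tool": "search_tickets", "user": "ana", "latency_ms": 40.0, "code": "OK"},
    {"tool": "search_tickets", "user": "ben", "latency_ms": 55.0, "code": "OK"},
    {"tool": "search_tickets", "user": "ana", "latency_ms": 300.0, "code": "DEADLINE_EXCEEDED"},
    {"tool": "read_build_log", "user": "ben", "latency_ms": 20.0, "code": "OK"},
]
report = summarize(events)
print(report["search_tickets"]["calls"], report["search_tickets"]["p95_latency_ms"])  # 3 300.0
```

With only a handful of samples, the nearest-rank p95 is simply the slowest call, so percentile dashboards are most meaningful once a tool has real traffic.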

What’s next? The team is exploring advanced semantic search to match a developer’s intent to the right tool instantly, along with community features such as ratings and reviews to build a culture of trust.

❓ Frequently Asked Questions

Q: Is this marketplace intended for open-source MCP servers?
A: Currently, the focus is on private, in-house MCP services developed specifically for use within the organization.

Q: Does the system only support stdio communication?
A: Currently, the local environment uses stdio for the agent-to-CLI connection. The architecture is designed to be flexible, however: in a remote environment (such as a pod), the agent could connect to the middleware over other transports, such as gRPC.
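For the local stdio link, MCP’s stdio transport sends JSON-RPC 2.0 messages one per line, and the specification forbids embedded newlines inside a message. The sketch below shows that framing, with an in-memory stream standing in for the pipe between the agent and the CLI (in practice, the subprocess’s stdin and stdout). `tools/list` and `tools/call` are standard MCP methods; the `search_tickets` tool and its arguments are hypothetical.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    # json.dumps (no indent) emits a single line and escapes any newline
    # characters inside strings, so one line == one JSON-RPC message.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    # Each non-empty line is a complete JSON-RPC message.
    for line in stream:
        if line.strip():
            yield json.loads(line)


# io.StringIO stands in for the pipe between the agent and the CLI.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "search_tickets", "arguments": {"q": "line one\nline two"}},
})
pipe.seek(0)
received = list(read_messages(pipe))
print(pipe.getvalue().count("\n"))                                      # 2
print(received[1]["params"]["arguments"]["q"] == "line one\nline two")  # True
```

Even though the second argument contains a newline, `json.dumps` writes it as the escape sequence `\n`, so the message still occupies exactly one line on the wire.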

✨ Final Thoughts

The MCP Marketplace represents a fundamental shift in how enterprises operationalize AI. By providing a secure, standardized, and discoverable bridge between LLMs and organizational knowledge, the team has created a blueprint for truly context-aware AI at scale.

The future of AI isn’t just about bigger models—it’s about better context. 🌐💡🦾