Beyond the Hype: Your Smart Guide to AI Implementation 🚀

We’ve all seen the dazzling headlines and heard the promises of AI revolutionizing everything. But let’s be honest, not every problem needs a generative AI superhero. Sometimes, the most powerful move is knowing when and where to deploy AI. That’s exactly the kind of no-nonsense, strategic thinking we dove into at a recent tech conference, and we’re here to break it down for you!

The Core Question: Is AI Really the Answer? 🤔

The speaker kicked things off with a relatable, and frankly hilarious, example: the Humane AI Pin. Remember the hype? The promise of a “smartphone killer”? And then… well, we all know how that story unfolded. This isn’t a jab at innovation, but a stark reminder that generative AI isn’t always the solution. The real challenge, and the focus of this talk, is knowing where and when to apply specific AI technologies. It’s about smart strategy, not just following the latest trend.

Mapping the AI Spectrum: Understanding “Where” and “When” 🗺️

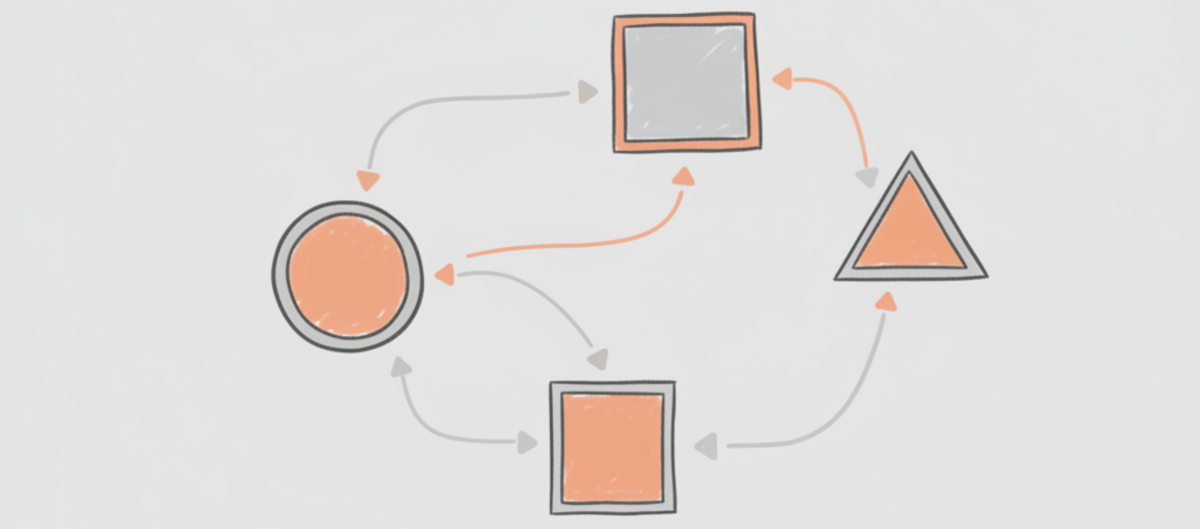

To navigate this complex landscape, the presentation introduced a brilliant framework based on two key dimensions: “Where” and “When.”

The “Where” Dimension: From Predictable to Creative 🎨

This dimension explores the nature of how AI systems operate. Think of it as a spectrum from absolute certainty to creative possibility:

- Deterministic Code: ✍️

  - These are your classic computer programs. They’re predictable, always giving you the same output for the same input.

  - Strengths: Consistency, precision.

  - Limitations: Lacks flexibility.

- Probabilistic Models (Including Generative AI): ✨

  - These are the deep learning models we often associate with AI. They’re inherently probabilistic, meaning their outputs can vary.

  - Strengths: Creativity, handling ambiguity, human-like interactions.

  - Challenges: Explainability and auditability can be tricky.

A key takeaway here is that when you use AI to generate deterministic code, the application that runs is still deterministic, which is a fantastic way to preserve that crucial predictability. Plus, a single large language model can actually be tuned across this spectrum by adjusting its parameters, for example sampling settings such as temperature!
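To make that tuning idea concrete, here is a minimal sketch of temperature sampling. The talk summary doesn't say which parameters the speaker had in mind, so temperature is our assumption, and the `logits` values and the function name are made up for illustration. At temperature 0 the sketch always picks the top-scoring option; at higher temperatures the choice starts to vary.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick an index from `logits`; temperature 0 means greedy (argmax)."""
    if temperature == 0:
        # Deterministic end of the spectrum: always the highest score.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Probabilistic end: softmax over temperature-scaled scores.
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


# Hypothetical scores for three candidate tokens (illustration only).
logits = [2.0, 1.0, 0.5]
rng = random.Random(0)

greedy = {sample_with_temperature(logits, 0, rng) for _ in range(100)}
varied = {sample_with_temperature(logits, 1.5, rng) for _ in range(1000)}
print("temperature 0   ->", sorted(greedy))
print("temperature 1.5 ->", sorted(varied))
```

Hosted LLM APIs may still show small variations even at temperature 0, so treat this as an illustration of the dial rather than a guarantee of determinism.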

The “When” Dimension: Training vs. Runtime ⏰

This dimension focuses on how we allocate AI’s powerful computing resources:

- Pre-training: 🧠

  - This involves extensive training of models for specific tasks, leading to highly optimized and reusable models through transfer learning.

  - Pro: Highly efficient for specific use cases.

  - Con: The knowledge gained becomes static once training is complete.

- Runtime Inference: 💨

  - This is all about real-time processing and reasoning. It allows for dynamic behavior and the use of current contextual information.

  - Focus: Prompt engineering becomes key.

  - Challenge: Maintaining stable system behavior can be demanding.

Ultimately, AI systems represent a delicate balance between training and inference. This balance can be a conscious strategic choice or a constraint dictated by your environment.

Strategic AI: Shaping Your “What” for Maximum Impact 🎯

By understanding when and where you’re deploying AI, you can strategically define what specific AI technologies you’ll use. This alignment has ripple effects across your entire operation:

End-User Functionality 🧑‍💻

- Deterministic systems: Offer unwavering consistency and precision.

- Probabilistic systems: Shine when handling ambiguity and delivering creative, human-like responses.

Technical Infrastructure & Platforms 🛠️

- Deterministic: Uses common tech stacks, easy to build and test, but scaling can be costly.

- Probabilistic: Fast to build and adaptive, but requires specialized testing and compute (think TPUs!). Pre-training can lead to optimized models and simpler deployment, but might lock you into proprietary stacks like NVIDIA’s. Runtime inference offers more compute flexibility, including edge devices, but demands hardware wherever models run.

Performance ⚡

- Deterministic: Resource-efficient and highly predictable.

- Probabilistic: Can be fast (as in fraud detection), but is often resource-intensive and can have “spiky” response times, especially with reasoning models. Transformer models, the backbone of LLMs, have inherent N-squared complexity: the cost of self-attention grows with the square of the input sequence length N.
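The N-squared point is easier to feel with numbers. The sketch below assumes N is the input sequence length and counts only the pairwise attention scores in a single standard self-attention pass; the function name is ours, and real models multiply this by layers and heads and often apply optimizations.

```python
def attention_score_count(sequence_length: int) -> int:
    """Number of token-to-token scores in one full self-attention pass."""
    # Every token attends to every token, so the count is N * N.
    return sequence_length * sequence_length


for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} tokens -> {attention_score_count(n):>12,} pairwise scores")
```

Doubling the input length quadruples the work, which is one reason long prompts get expensive quickly.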

Reliability & Trustworthiness ✅

- Deterministic: Predictable and auditable – great for trust.

- Probabilistic: Can generalize and see through noise, but are imperfect and can lead to hallucinations. Pre-training builds reliability through testing, but risks outdated data and bias. Runtime inference allows grounding in data and chain-of-thought reasoning, but can introduce data privacy risks.

Compliance & Governance 📜

- Deterministic: Well-understood for compliance and audits.

- Probabilistic: Requires guardrails and safety techniques, but transparency remains a hurdle. Compliance for AI is a rapidly evolving space. Pre-training offers central control but lifecycle compliance can be tough. Runtime inference relies on dynamic filtering but poses data privacy risks.

Cost Considerations 💰

- Deterministic: Lower running costs, but higher development and maintenance expenses.

- Probabilistic: Consumption-based pricing sounds good, but it’s underpinned by very expensive GPU compute. Pre-training costs are amortized over inferences but require significant upfront investment. Runtime inference offers hardware choices but can involve premium rental capacity. And let’s not forget the environmental footprint of AI data centers!
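The amortization idea can be sketched as simple arithmetic: a one-time training cost is spread over every inference the model ever serves, on top of the compute cost of each call. All figures and names below are hypothetical, picked only to show the shape of the curve, not taken from the talk.

```python
def cost_per_inference(upfront_cost: float, inference_count: int, marginal_cost: float) -> float:
    """Spread a one-time training cost across all inferences, then add the per-call cost."""
    if inference_count <= 0:
        raise ValueError("inference_count must be positive")
    return upfront_cost / inference_count + marginal_cost


# Hypothetical figures (illustration only).
UPFRONT = 100_000.0  # one-time training spend
MARGINAL = 0.002     # compute cost of a single inference

for count in (10_000, 1_000_000, 100_000_000):
    per_call = cost_per_inference(UPFRONT, count, MARGINAL)
    print(f"{count:>11,} inferences -> {per_call:.4f} per inference")
```

At low volume the upfront investment dominates; at high volume the per-call compute cost does. That is why the expected number of inferences matters so much when weighing pre-training against runtime inference.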

Risk Management 🛡️

- Deterministic: Risks are well-understood, but human error persists.

- Probabilistic: Can distill intuition but introduces risks like bias and hallucinations. Pre-training risks lie in model accuracy and embedded bias. Runtime inference risks include cost control and data privacy.

Skill Sets & Talent 👨‍💻

- Deterministic skills: Abundant, but perhaps less in vogue.

- Probabilistic AI and data science: Education is booming, but a skills shortage persists. Pre-training needs data scientists, who are rare. Runtime inference, particularly prompt and context engineering, is a more accessible skill for many people to pick up. Agentic AI, a blend of deterministic components and runtime inference, is a particularly exciting area, bridging existing app development skills with new AI capabilities!
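Here is a rough sketch of what that agentic blend can look like in code: a model decides which tool to call at runtime, and the tool itself is ordinary deterministic code. Everything here is hypothetical, including the `stub_model` stand-in (a real system would call an LLM at that point), the `add_vat` tool, and the 20% rate.

```python
from typing import Callable, Dict


def add_vat(amount: float) -> float:
    """Deterministic tool: the same input always yields the same output."""
    return round(amount * 1.20, 2)  # hypothetical 20% rate


TOOLS: Dict[str, Callable[[float], float]] = {"add_vat": add_vat}


def stub_model(request: str) -> dict:
    """Stand-in for a runtime LLM call (hypothetical); a real model's choice could vary."""
    return {"tool": "add_vat", "argument": 100.0}


def run_agent(request: str, model: Callable[[str], dict] = stub_model) -> float:
    """Let the model pick a tool, then run that tool as plain deterministic code."""
    decision = model(request)
    tool = TOOLS.get(decision["tool"])
    if tool is None:
        # Guardrail: refuse anything outside the known tool set.
        raise ValueError(f"unknown tool: {decision['tool']}")
    return tool(decision["argument"])


print(run_agent("What is 100 with VAT?"))
```

The split keeps the unpredictable part (choosing what to do) small and the part that has to be exact (the calculation) in testable, auditable code.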

The Ultimate Takeaway: Strategic Placement for Maximum Value ✨

The presentation wrapped up with a powerful, unifying message: there is value across the entire AI spectrum. By understanding when and where you’re applying AI, you can strategically define what makes sense for your organization.

The guiding principle? “There is a place for everything. Everything in its place.”

The goal is to thoughtfully identify the right place and the right choice for the work that needs to be done. It’s about making informed, strategic decisions that drive real value, rather than just chasing the latest AI trend. Happy strategizing! 💡