
Generative AI: The Sixth Great Shift in Software Delivery? 🚀

The world of software development is buzzing with the arrival of Generative AI, which may be the sixth major shift in how we build and deliver software. It would follow in the footsteps of higher-level languages, agile, cloud, DevOps, and microservices. But as with any powerful new tool, there are unintended consequences to consider.

Charles Humble, host of the GOTO podcast's "State-of-the-Art" miniseries, sat down with Nathan Harvey, leader of Google Cloud's DORA team, to unpack the impact of AI on software delivery. DORA is a decade-long research initiative that studies the metrics, capabilities, and conditions that drive strong software delivery performance. Its annual global survey reaches nearly 5,000 technology professionals. The survey offers insight into what helps teams deliver faster, more stably, and more efficiently.

DORA Metrics: Evolving Beyond Trade-offs, Embracing Tandem Growth 📈

For years, DORA has tracked key metrics to understand software delivery performance. This year, the metrics have evolved. We now have five core DORA metrics, emphasizing that throughput and stability aren’t opposing forces, but rather complementary pillars of high performance. High-performing teams excel at both.

Here’s a look at the expanded DORA metrics:

Throughput 💨

- Lead Time for Changes: How long it takes from a code commit to getting it into production.

- Deployment Frequency: How often you’re releasing updates to your live environment.

Stability 🛡️

- Failed Deployment Recovery Time: How quickly you can get things back on track after a broken deployment.

- Change Failure Rate: The percentage of deployments that cause issues requiring immediate attention.

- Deployment Rework Rate: A newer metric measuring the proportion of deployments that are unplanned and made to fix issues in production, including unplanned rollbacks.

The research is clear: high throughput and high stability go hand-in-hand.
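To make the five definitions concrete, here is a minimal sketch that computes them from a list of deployment records. The `Deployment` record format, its field names, and the `dora_metrics` helper are hypothetical illustrations, not part of DORA or any tool. The sketch also simplifies in three places. It uses medians, it measures recovery from the failed deployment rather than from when the failure was detected, and it treats "rework" as a simple `unplanned` flag.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record format)."""
    commit_time: datetime                      # when the change was committed
    deploy_time: datetime                      # when it reached production
    failed: bool = False                       # caused a failure needing immediate attention
    recovered_time: Optional[datetime] = None  # when service was restored after a failure
    unplanned: bool = False                    # unplanned deployment made to fix a production issue


def dora_metrics(deploys: List[Deployment], period_days: float) -> dict:
    """Compute the five metrics over one reporting window (simplified definitions)."""
    if not deploys:
        raise ValueError("need at least one deployment in the window")
    n = len(deploys)
    lead_hours = [(d.deploy_time - d.commit_time).total_seconds() / 3600 for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Assumption: recovery is measured from the failed deploy to restoration.
    recovery_hours = [
        (d.recovered_time - d.deploy_time).total_seconds() / 3600
        for d in failures
        if d.recovered_time is not None
    ]
    return {
        # Throughput
        "lead_time_hours": median(lead_hours),
        "deploys_per_day": n / period_days,
        # Stability
        "recovery_time_hours": median(recovery_hours) if recovery_hours else 0.0,
        "change_failure_rate": len(failures) / n,
        "rework_rate": sum(d.unplanned for d in deploys) / n,
    }


t0 = datetime(2025, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0, t0 + timedelta(hours=4), failed=True, recovered_time=t0 + timedelta(hours=5)),
    Deployment(t0, t0 + timedelta(hours=6), unplanned=True),
    Deployment(t0, t0 + timedelta(hours=8)),
]
metrics = dora_metrics(deploys, period_days=2)
print(metrics)
```

The sketch keeps throughput and stability in one result on purpose. Read side by side, the numbers show whether a team is gaining speed at the cost of stability, or improving both.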

Harvey suggests a three-step approach to leverage these metrics:

- Baseline with Metrics: Start by measuring your current performance using the five DORA metrics.

- Assess Capabilities: Dive deeper by evaluating underlying capabilities like fostering a learning culture, building fast feedback loops, ensuring quality documentation, streamlining change approvals, and embracing continuous integration.

- Formulate Hypotheses & Experiment: Use DORA’s findings to hypothesize improvements (e.g., “Improving our CI practices will boost delivery performance”) and then test these hypotheses in your unique context.
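Steps one and three can be sketched as a simple before-and-after comparison. Step two, assessing capabilities, is a qualitative exercise and is not modeled here. This is a minimal illustration under stated assumptions. The lead times are made-up numbers, and the `evaluate_hypothesis` helper and its 10% threshold are hypothetical. Comparing medians of a handful of samples ignores noise and seasonality, so a real experiment would need more data and care.

```python
from statistics import median


def evaluate_hypothesis(baseline_hours, experiment_hours, min_improvement=0.10):
    """Compare median lead time before and during an experiment.

    Returns the relative change (negative means faster) and whether it meets
    an improvement threshold agreed on up front (hypothetical 10% default).
    """
    before = median(baseline_hours)
    after = median(experiment_hours)
    change = (after - before) / before
    return change, change <= -min_improvement


# Step 1: baseline lead times (hours) measured before improving CI.
baseline = [30, 26, 40, 35, 28]
# Step 3: lead times measured while the CI experiment runs.
experiment = [22, 25, 19, 30, 24]

change, supported = evaluate_hypothesis(baseline, experiment)
print(f"median lead time changed by {change:.0%}; hypothesis supported: {supported}")
```

Fixing the success threshold before the experiment starts keeps the team honest. It avoids reading whatever result appears as confirmation.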

Generative AI: An Amplifier of Systems, Revealing Hidden Bottlenecks 💡

A fascinating, and initially concerning, finding from the 2024 DORA report showed that as AI adoption increased, both stability and throughput declined. While the 2025 report indicates a rebound in throughput, instability unfortunately persists. Harvey points to two main culprits:

- The Learning Curve: Any new technology comes with an adjustment period, and AI is no different. Initial dips in performance are to be expected.

- Bottleneck Misalignment: This is the crucial insight. If AI is focused on speeding up code generation (often not the bottleneck), it can overwhelm downstream processes like testing, review, and deployment. This paradoxically harms overall system performance.

Harvey emphasizes the paramount importance of systems thinking. Optimizing individual developer productivity without considering the entire delivery pipeline is a recipe for disaster. He urges organizations to align software delivery metrics with business metrics and investigate any divergence as a prime opportunity for improvement.

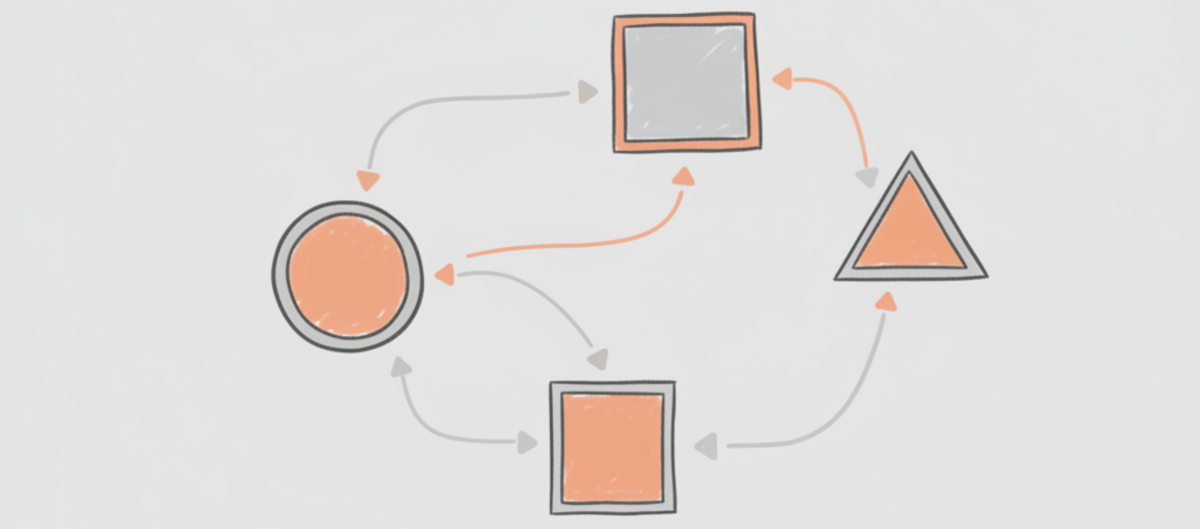

Think of AI as a powerful amplifier. In healthy, well-oiled delivery pipelines, AI accelerates the flow. But in systems with existing friction, AI amplifies those pain points. For example, if code review is a bottleneck, faster code generation will just create a bigger backlog at the review stage. This highlights a key takeaway: AI’s benefit might be greater when applied to the code review process itself, rather than solely to code authorship.
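The review-bottleneck argument can be illustrated with a toy queue model. This is a minimal sketch assuming a single review stage with a fixed daily capacity. The `simulate` helper and all the numbers are hypothetical, and the model ignores review quality, rework, and every other stage.

```python
def simulate(days, authored_per_day, reviewed_per_day):
    """Return (changes shipped, changes still waiting for review) after `days`."""
    backlog = 0
    shipped = 0
    for _ in range(days):
        backlog += authored_per_day            # new changes join the review queue
        done = min(backlog, reviewed_per_day)  # review capacity caps what can ship
        backlog -= done
        shipped += done
    return shipped, backlog


print(simulate(days=20, authored_per_day=5, reviewed_per_day=5))    # (100, 0): baseline
print(simulate(days=20, authored_per_day=10, reviewed_per_day=5))   # (100, 100): faster authoring only
print(simulate(days=20, authored_per_day=10, reviewed_per_day=10))  # (200, 0): review keeps pace
```

In the second run, doubling the authoring rate ships nothing extra and only grows the review queue. Output rises only when review capacity rises too, which matches the point about applying AI to review.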

Foundational Capabilities for Scaling AI Success 🛠️

To truly harness the power of AI and scale its adoption effectively, organizations need to cultivate seven key foundational capabilities:

- Clear AI Stance: Have a defined policy for how AI will be used.

- Robust Data Ecosystem: Ensure AI has access to high-quality, well-connected data.

- Internal Data Access: AI needs to be able to leverage your organization’s own data.

- Strong Version Control: Commit AI-generated code frequently so that changes can be rolled back easily.

- Working in Smaller Batches: Break down changes to maximize AI’s impact.

- User-Centric Focus: Use AI to directly support user needs and create delightful experiences.

- Effective Internal Platform: A strong internal platform acts as a force multiplier for AI adoption.

The Documentation Dilemma & The Trust Paradox 🤔

In a surprising turn, the 2024 report revealed that AI adoption actually improved documentation quality, not quantity! This is likely due to AI’s ability to generate initial drafts and summarize complex information effectively.

However, despite reported productivity gains and perceived quality improvements, a significant trust paradox has emerged. In 2025, a whopping 30% of respondents reported little or no trust in AI output. This skepticism is fueled by firsthand experiences with LLMs fabricating information, such as nonexistent API features. Harvey acknowledges this challenge and stresses that users must critically evaluate AI-generated content and validate its accuracy.

The AI Trust Paradox: Navigating Expertise & Ownership in a Changing Landscape 🌐

This segment dives deep into the evolving relationship between developers and AI, highlighting a critical “trust paradox” and reshaping our understanding of expertise and ownership.

Productivity Soars, But Confidence Lags 📉

While developers are experiencing significant productivity boosts and improved code quality with AI assistance, their trust lags far behind. In 2025, only 4% of respondents placed high trust in AI, while 30% expressed little or no confidence. Harvey advocates a balanced approach and warns against both blind faith and outright dismissal. True trust, he argues, is built when AI consistently meets expectations, even if those expectations are for "mediocre responses." Today's AI tends to prioritize user satisfaction by giving direct answers without critically evaluating their validity, which can create a false sense of reliability.

Code Ownership: A Developing Frontier 👨‍💻

A fundamental question arises: who truly owns code generated with AI’s help? Early research suggests that developers’ feelings of ownership remain relatively stable, but this is an area that demands deeper investigation as AI integration becomes more pervasive.

The Shifting Value of Skills: Evolution, Not Extinction 🦾

The fear that AI might devalue human skills is understandable. AI can now generate UX, code, and documentation, which raises questions about the future of specialists like UX designers, SREs, and technical writers. Some roles may indeed diminish, as in earlier technological shifts, but AI also creates new avenues for skill acquisition. Individuals can quickly reach a novice level of skill across many domains.

Crucially, AI does not obviate the need for deep expertise. A software engineer using AI still relies on other engineers. A product manager using AI for prototyping still needs expert technical writers. AI democratizes skill acquisition, but it does not replace the profound expertise that seasoned professionals bring to the table. The nuanced understanding and communication prowess of an expert technical writer, for example, are capabilities AI currently cannot replicate.

The Future of Software Delivery: Adapting to Evolving “Physics” 📡

Looking ahead, we can anticipate a “J-curve of transformation,” similar to the adoption of Agile, DevOps, and Cloud. This involves an initial dip in productivity, followed by eventual improvements. The key challenge lies in navigating this dip and accelerating the upward trend.

The core tenets of software delivery—writing, validating, approving, shipping, and monitoring code—will likely persist. However, the “physics of software delivery” will undoubtedly evolve. Systemic thinking is paramount, as complex systems involving human and technological interactions will exhibit emergent behaviors. Critical thinking and experimentation will be our guiding lights in harnessing AI’s potential.

The message is clear: AI is here to stay. Organizations are championing it from the top down, and practitioners are embracing it at the grassroots level. While the exact form of AI in five years is uncertain, its deep integration into our workflows is inevitable.

Evolving Metrics and Strategic AI Integration 🎯

DORA metrics will continue to adapt to reflect these changing practices, with the capacity for safe and frequent application changes remaining a crucial indicator of quality.

For team leaders looking to integrate AI, a two-pronged approach is recommended:

- Adapt Current Systems: Employ value stream mapping to thoroughly understand your existing processes, identify bottlenecks, and pinpoint areas ripe for AI injection. This collaborative exercise fosters a shared understanding and is often more valuable than the resulting artifact.

- Evolve Towards AI-Native Thinking: While “AI-native” software delivery is still largely conceptual, it’s essential to begin envisioning the development and management of applications with AI at their core.
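As noted above, value stream mapping is mainly a collaborative exercise. Still, the arithmetic behind a finished map is simple and shows where AI might help most. This is a minimal sketch under stated assumptions. The stage names and hours are hypothetical, and the `Stage` and `analyze` helpers are illustrations only. The "slowest stage" is a simplification: it is the stage with the most elapsed time, counting waiting plus working.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    process_hours: float  # hands-on time spent on a change in this stage
    wait_hours: float     # idle time before the stage starts work


def analyze(stream):
    """Return (lead time, flow efficiency, slowest stage) for a value stream."""
    process = sum(s.process_hours for s in stream)
    wait = sum(s.wait_hours for s in stream)
    lead_time = process + wait
    flow_efficiency = process / lead_time  # share of lead time spent actually working
    slowest = max(stream, key=lambda s: s.process_hours + s.wait_hours)
    return lead_time, flow_efficiency, slowest.name


stream = [
    Stage("coding", 6.0, 0.0),
    Stage("code review", 1.0, 30.0),
    Stage("testing", 2.0, 4.0),
    Stage("change approval", 0.5, 24.0),
    Stage("deployment", 0.5, 2.0),
]
lead_time, efficiency, slowest = analyze(stream)
print(f"lead time {lead_time}h, flow efficiency {efficiency:.0%}, slowest stage: {slowest}")
```

In this made-up map, halving coding time would cut only 3 of 70 hours. The waits before code review and change approval are the more promising places to inject AI or process changes.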

The journey ahead is a collaborative one. As the speaker put it, let’s all “go figure that out together.”